India’s Strategic Response to Misinformation and Deepfakes

Introduction

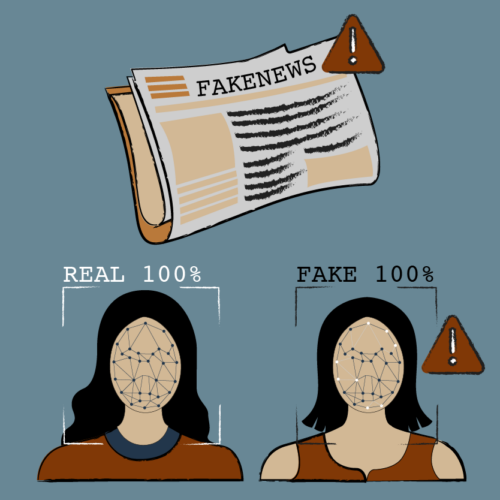

Deepfakes, which can be used for purposes both innocuous and malicious, are a growing concern throughout the world, including in India. Their dangers were starkly highlighted during the 2024 Indian election cycle, when a Tamil-language video purportedly featuring Duwaraka, the deceased daughter of Tamil Tiger leader Velupillai Prabhakaran, surfaced online and sparked emotional responses in Tamil Nadu. Fact-checker Muralikrishnan Chinnadurai quickly identified it as an AI-generated deepfake, noting glitches that betrayed its artificial nature. The episode showed how easily deepfakes can exploit sensitive issues, particularly during elections, when misinformation spreads rapidly. Beyond such localized manipulations, deepfakes have targeted prominent public figures such as actor Rashmika Mandanna and cricketer Sachin Tendulkar, and have even altered speeches by leaders such as Prime Minister Narendra Modi.

These developments prompted legal and regulatory responses from the Indian Government. The Ministry of Electronics and Information Technology (MeitY) issued advisories emphasizing intermediaries’ obligations under the IT (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, and highlighting the need for a comprehensive legal framework to address this growing concern.

The term “deepfake” is a blend of “deep learning” (DL) and “fake,” and describes photo-realistic video or image content created with the help of DL. It takes its name from the username of an anonymous Reddit user who, in late 2017, used deep learning methods to replace people’s faces in pornographic videos with other people’s faces, producing photo-realistic fake videos. In essence, a deepfake maps one person’s face onto another’s in a video and reproduces matching facial expressions, so that the target appears to say words that were actually spoken by someone else.

Challenges Posed by the Use of Deepfakes

India’s unfortunate reputation as a major source of misinformation is linked to its high rate of Internet usage and growing social media engagement. The country has 323 million internet users, 67% of whom live in urban areas and the remaining 33% in rural regions. A lack of media literacy was a pivotal factor in the spread of misinformation during the COVID-19 pandemic, when false claims about remedies and therapies circulated rapidly across social media channels in India. One notable case was a widely shared video promoting the consumption of cow urine as a preventive or cure for COVID-19. Although health authorities discredited it, the video amassed millions of views and posed potential health hazards, as some individuals believed and acted on its claims.

Moreover, the potential impact of deepfakes on fair elections and on democracy as a whole is a matter of great concern. In 2020, India saw its first use of AI-generated deepfakes in political campaigning, when deepfake videos of politician Manoj Tiwari circulated on WhatsApp groups ahead of the Delhi Legislative Assembly election. The videos showed Tiwari making accusations against his political rival Arvind Kejriwal in both English and Haryanvi. In a diverse and politically sensitive country like India, deepfakes can exacerbate existing communal tensions, incite violence and erode trust in democratic institutions, leading to social unrest and instability.

Another major threat lies in the financial domain, where unsuspecting citizens are coaxed or threatened through deepfakes into transferring money to criminals’ accounts. In one such instance, in April 2024, a Mumbai businessman was swindled out of ₹80,000 in an AI voice-cloning scam. He received a call, purportedly from the Indian embassy in Dubai, informing him that his son had been arrested and sentenced to imprisonment, and asking him to transfer money to pay the bail amount. Because the voice on the call was a clone of his son’s, the businessman believed the story and transferred the amount to the fraudsters.

The Indian Approach Against Misinformation and Deepfakes

In India, there is currently no legislation specifically addressing deepfakes. By issuing directives to intermediaries, MeitY has taken a first step toward comprehensive legislation, as such directives typically precede the enactment of formal laws.

The directive issued by MeitY on December 26, 2023 mandated that intermediaries ensure their platforms are not used to share illegal or harmful content, including misinformation and deepfakes. Rule 3(1)(b)(v) of the IT Rules explicitly prohibits the dissemination of false information, and intermediaries are required to remove such content within 36 hours of notification. Platforms must also inform users of the penal provisions under the Indian Penal Code (IPC) and the IT Act that apply to violations. Intermediaries that fail to comply are liable under these laws.

This raises the question of what constitutes an intermediary for the purposes of these directives. Section 2(1)(w) of the IT Act, 2000 defines an intermediary as any person who, on behalf of another person, receives, stores or transmits an electronic record or provides any service with respect to that record. Intermediaries include network service providers, telecom service providers, internet service providers, search engines, social-media platforms, online payment sites and online marketplaces, among others.

Building on its earlier directive, MeitY issued a further directive on March 1, 2024 concerning the embedding of permanent markers or metadata in AI-generated content such as deepfakes, so that the content leaves a digital footprint traceable to its original creator or source.
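To make the traceability idea concrete, the following is a minimal sketch, assuming a hypothetical provenance record: it labels a file as synthetic, records a creator identifier and the generating tool, and binds them to the exact bytes of the content with a SHA-256 fingerprint. The field names (`synthetic`, `creator_id`, `tool`) and the functions `make_provenance_record` and `verify` are illustrative assumptions; the MeitY directive does not prescribe this or any particular format, and production provenance schemes also sign such records cryptographically so they cannot be forged.

```python
import hashlib
import json
from datetime import datetime, timezone


def make_provenance_record(content: bytes, creator_id: str, tool: str) -> dict:
    """Build a hypothetical provenance record for AI-generated content.

    The record ties a creator identifier and tool name to a SHA-256
    fingerprint of the exact bytes, so a copy can be matched to its source.
    """
    return {
        "synthetic": True,  # label the content as AI-generated
        "creator_id": creator_id,  # hypothetical identifier of the originator
        "tool": tool,  # hypothetical name of the generating tool
        "sha256": hashlib.sha256(content).hexdigest(),  # content fingerprint
        "created_utc": datetime.now(timezone.utc).isoformat(),
    }


def verify(content: bytes, record: dict) -> bool:
    """Return True if the content still matches the fingerprint in the record."""
    return hashlib.sha256(content).hexdigest() == record["sha256"]


if __name__ == "__main__":
    video_bytes = b"synthetic video frames"  # placeholder content
    record = make_provenance_record(video_bytes, creator_id="user-123", tool="example-generator")
    print(json.dumps(record, indent=2))
    print(verify(video_bytes, record))  # unchanged copy matches
    print(verify(video_bytes + b" edited", record))  # altered copy does not
```

Because the fingerprint changes with any modification, the check flags altered copies, but it also fails on harmless re-encoding. And because this sketch keeps the record beside the content rather than inside it, the record can simply be discarded. Both limitations illustrate why the directive speaks of markers embedded permanently in the content itself.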

The Information Technology Act, 2000 (IT Act) is the primary legislation governing offenses in the digital domain, including privacy violations, online fraud and the misuse of technology, and it has significant implications for emerging threats such as deepfakes. Section 66E, which penalizes intentionally or knowingly capturing, publishing or transmitting images of a person’s private areas without their consent, in circumstances that violate their privacy, can be applied to deepfake offenses. It carries a penalty of imprisonment for up to three years, a fine of up to ₹2 lakh, or both. Another pertinent provision is Section 66D, which penalizes cheating by personation, that is, using a communication device or computer resource to deceive others by assuming another person’s identity. It carries imprisonment for up to three years and a fine of up to ₹1 lakh. These sections can be invoked to hold accountable those implicated in deepfake cybercrimes within India’s jurisdiction.

However, Section 79 of the IT Act, often referred to as the “safe harbour” provision, provides certain protections to intermediaries. Under this section, intermediaries such as social media platforms and internet service providers are not held liable for user-generated content unless they have actual knowledge of illegal content and fail to act upon it. With MeitY’s directives on deepfakes, however, intermediaries now bear increased responsibility to ensure that harmful content is swiftly removed upon notification, including deepfake videos that violate privacy or spread misinformation. Intermediaries must also comply with government orders, and failure to meet these obligations can lead to penalties and the loss of safe harbour protection. Given the growing prevalence of deepfakes, this provision needs careful consideration, as it can either shield platforms or expose those that default on their obligations.

Conclusion

India’s strategic response to misinformation and deepfakes, as outlined by MeitY, marks a significant step toward safeguarding digital trust and privacy. However, the complexity of the issue demands continuous adaptation and global collaboration. Although India currently lacks legislation specifically addressing deepfakes, existing legal provisions and government initiatives have the potential to address this concern. As deepfakes grow more prevalent and sophisticated, the Indian government is likely to take further measures to tackle the issue and protect individuals from harm.